AI is, unsurprisingly, one of the big topics at the 2025 Affiliate Summit East conference our team is attending. There’s virtually no avoiding artificial intelligence at this point, even if you’re actively trying to. Smart professionals aren’t trying to avoid it, though.

The key is to find effective ways to leverage AI: to use it in a way that drives results and doesn’t feel, well, sketchy. That’s what we’re doing at Stack, not just in our affiliate and performance marketing work but across the business. Ahead of ASE, we wanted to share how the team thinks about applying AI intentionally, strategically, and without losing the human edge.

AI Is a Smart Collaborator, Not a Replacement

Let me start by saying AI is not an ideal tool for disengaged work. That sounds ironic given the self-driving nature of these tools, but using AI as a replacement for thinking doesn’t help. Broadly, Stack’s approach is to treat these LLMs like super-intelligent friends who are also very subservient.

These systems can be applied, to varying degrees, to almost any problem we face, but I use them most often as a quick knowledge resource or thought partner. They’re best suited to targeted problem-solving: those moments when you can see the finish line but an impassable obstacle is in the way. They’re most impactful for my own work when they bridge the gap between a solution I can envision and the resources needed to accomplish it. Whether the missing piece is encyclopedic knowledge, time, or skills, AI has helped speed up both straightforward two-hour tasks and complex multi-week projects.

For instance, I love asking an AI chatbot whether something I’m about to say sounds convoluted or whether I have a good read on a situation. Another example: “Even if a Google Sheets formula existed that did what I needed, I’d never figure out how to make it work with my data” is exactly the type of problem I’d throw at AI.

What AI’s Great At (And What Still Needs a Human)

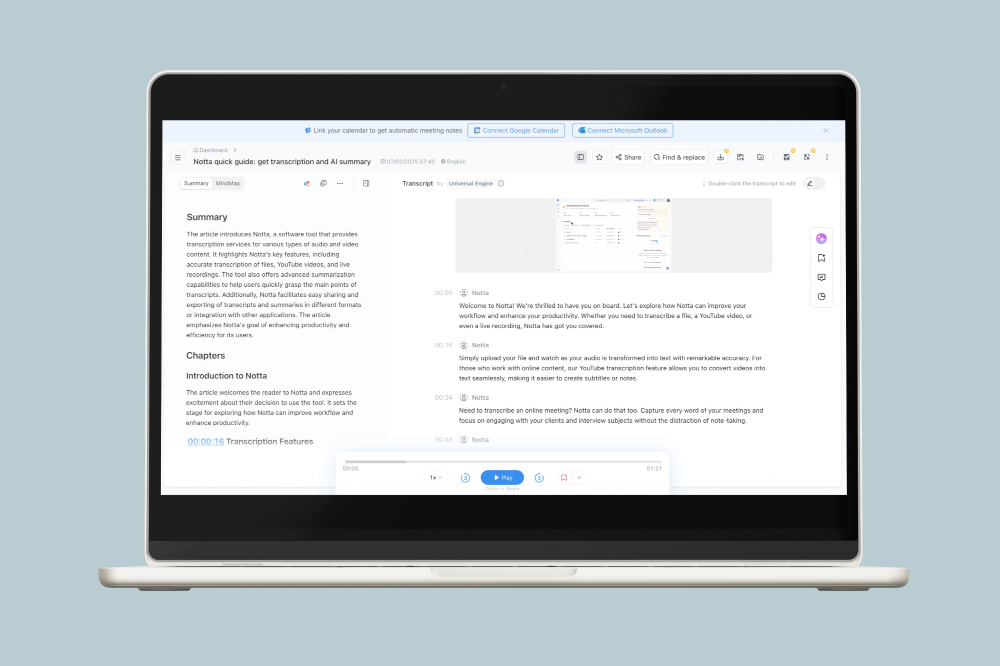

The most valuable use cases for AI at Stack aren’t flashy—they’re the behind-the-scenes ones. Think: transcription, summarization, triaging bugs, scaling up repetitive analysis. AI helps us move faster when we already know what we’re doing.

For example, we’re using AI to quickly generate summaries of publisher performance, flag technical issues that follow repeatable patterns, and spin up lightweight reports from complex datasets. We also use machine learning for fraud prevention and, through various partners, for dynamic on-site personalization. Part of our help desk is also powered by these newer LLM-based systems.

These aren’t glamorous applications, but they save hours every week. High-throughput individual contributors can now complete a project in 10–20% of the time it would have taken them in the past.

AI is good at recognizing when things don’t work, too. We’ve fed it bug reports to help cluster and prioritize tickets faster, which lets our product team focus more on fixes and less on sifting through noise.
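To make the clustering idea a little more concrete, here’s a minimal sketch. It does not use an LLM; it swaps in a simple deterministic stand-in that reduces each ticket to a rough “signature” (lowercased, with hex IDs and numbers masked) and then groups and ranks tickets by how often that signature appears. The ticket strings and the `normalize` and `cluster_tickets` names are hypothetical illustrations, not Stack’s actual pipeline.

```python
import re
from collections import defaultdict


def normalize(ticket: str) -> str:
    """Reduce a ticket to a rough signature by masking volatile details."""
    text = ticket.lower()
    # Mask hex IDs (e.g. 0x1f3a) before plain numbers so each becomes one token.
    text = re.sub(r"0x[0-9a-f]+", "<hex>", text)
    # Mask remaining digit runs (order IDs, status codes, line numbers).
    text = re.sub(r"\d+", "<num>", text)
    # Collapse repeated whitespace.
    return " ".join(text.split())


def cluster_tickets(tickets: list[str]) -> list[tuple[str, list[str]]]:
    """Group tickets by signature and return clusters, largest first."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for ticket in tickets:
        clusters[normalize(ticket)].append(ticket)
    # Bigger clusters first; ties broken alphabetically for stable output.
    return sorted(clusters.items(), key=lambda item: (-len(item[1]), item[0]))


if __name__ == "__main__":
    reports = [
        "Checkout link 404 for order 1182",
        "Checkout link 404 for order 2047",
        "Timeout at 0x1F3A in feed sync",
        "checkout link 404 for order 993",
    ]
    for signature, members in cluster_tickets(reports):
        print(len(members), signature)
```

The biggest cluster floats to the top, which is the point: the team looks at one recurring problem instead of three separate tickets. An LLM-based approach can group tickets whose wording differs far more than this regex-based version could handle.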

Using AI as a fresh set of eyes, to challenge your theory of why something is happening or how to make something work more efficiently, is an ideal use case.

AI’s Ongoing Shortcomings

Where it tends to fall apart? Anything that needs empathy, taste, or deep contextual awareness.

AI is still not great at drafting the first version of creative content, especially when it needs to align with a brand voice or handle nuanced topics. The same goes for interpreting user feedback or complex product issues that don’t follow a clear pattern. A summary can help you quickly get your bearings (as with bug tickets), but you’ll often still need to review the original input manually to do the job well. All of these things, and more, are still human territory.

This may be common knowledge, but it bears repeating: whenever you use a chatbot, avoid steering the AI toward the conclusion you want. Instead, set the context, provide the data, and then have a conversation.

The Stack Playbook for Evaluating AI Tools

We’ve tested a lot of tools. Honestly, most of them don’t make it past the demo phase. There are hundreds of AI-powered SaaS products out there solving problems no one actually has. Sometimes it feels like a race to bolt GPT onto everything, whether it adds value or not.

Our approach to evaluating new tools is simple: Does it remove a blocker? Does it make someone faster or sharper at a task they already do? If not, it’s probably not worth it.

That same mindset applies to tracking AI models themselves. Since the companies behind the major models now run Apple-style keynotes, we usually go straight to the source and keep an eye on what’s released by teams like Anthropic and OpenAI, as well as open-source groups putting out frontier-level models, like DeepSeek or Moonshot AI. There are also public leaderboards and stealth tests where you can see how different models perform side by side. They help you cut through the clutter and form a grounded opinion of what’s useful versus what’s hype.

We’ve had the most success integrating AI capabilities into workflows we already own. That might mean plugging AI into our analytics process to accelerate pattern recognition or using it as a QA buddy before launching a tool. But if something feels like a solution in search of a problem, we let it go.

A Big Opportunity for Affiliates Right Now

One area we’re especially excited about is helping creators and publishers do more with less.

AI is making it easier for affiliate partners to build custom experiences, whether that’s a wishlist generator, product roundup page, or a mobile-optimized product feed. These tools aren’t new, but AI is accelerating how fast creators can spin them up and personalize them.

The key here is that the vision still has to come from the humans. We can give creators templates and prompts or even generate the skeleton of a mini-shop. But the magic happens when they bring their own POV and audience knowledge to the table. AI powers the front end and lowers the effort to test, optimize, and iterate, but people still steer the strategy and creativity.

Thoughtful Tech > Flashy Features

You don’t need to be an AI expert to benefit from the tech, but you do need to be thoughtful. At Stack, we’re not chasing buzz or trying to automate everything. We’re looking for the moments where AI removes friction, and then implementing it in the smartest, safest ways we can.

That means we’re not just throwing generative features into the wild without a plan. If we were to add something like AI-generated review summaries to every product page, we’d approach it deliberately. That includes embedding brand guidelines into the prompt, using negative prompting to avoid tone issues, and caching responses to control cost. Guardrails matter when you’re working with models this powerful. Even behind-the-scenes tools deserve responsible oversight.
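If we did build a feature like that, the three guardrails might look roughly like the sketch below. It’s illustrative only: `call_model` is a hypothetical stand-in for whichever LLM API a team actually uses, and the guideline text, the “avoid” list, and the in-memory cache keyed by a hash of the product ID plus its reviews are all assumptions, not a description of a shipped Stack feature.

```python
import hashlib
from typing import Callable

# Hypothetical brand rules; a real team would load its own style guide.
BRAND_GUIDELINES = "Friendly, concise, second person. Two sentences max."
# Negative prompting: things the summary must NOT do.
AVOID = ["superlatives like 'best ever'", "medical or safety claims", "sarcasm"]


def build_prompt(product: str, reviews: list[str]) -> str:
    """Embed brand guidelines and explicit 'avoid' instructions in the prompt."""
    avoid_lines = "\n".join(f"- Avoid {item}." for item in AVOID)
    review_text = "\n".join(f"* {r}" for r in reviews)
    return (
        f"Brand guidelines: {BRAND_GUIDELINES}\n"
        f"Restrictions:\n{avoid_lines}\n\n"
        f"Summarize these customer reviews of {product}:\n{review_text}"
    )


class SummaryCache:
    """Cache summaries so unchanged reviews never trigger a second model call."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model  # hypothetical LLM client function
        self._store: dict[str, str] = {}

    def _key(self, product_id: str, reviews: list[str]) -> str:
        # Key on product ID plus review content; new reviews yield a new key.
        payload = product_id + "\x00" + "\x00".join(reviews)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def summarize(self, product_id: str, product: str, reviews: list[str]) -> str:
        key = self._key(product_id, reviews)
        if key not in self._store:
            self._store[key] = self._call_model(build_prompt(product, reviews))
        return self._store[key]


if __name__ == "__main__":
    calls = []

    def fake_model(prompt: str) -> str:
        # Stand-in for a real LLM call; records each invocation.
        calls.append(prompt)
        return "Reviewers like the fit and the price."

    cache = SummaryCache(fake_model)
    reviews = ["Fits well.", "Great price."]
    cache.summarize("sku-123", "Trail Jacket", reviews)
    cache.summarize("sku-123", "Trail Jacket", reviews)
    print(len(calls))  # 1: the second request is served from the cache
```

Keying the cache on review content means a summary is regenerated only when the underlying reviews change, which is where the cost control comes from. A plain dictionary keeps the sketch simple; a production setup would more likely use a shared cache with expiry.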

The best business strategies are still deeply human. But if AI can take the grunt work off your plate, why wouldn’t you let it?